When you send a message on Telegram, you expect privacy. But what happens when that same app spreads illegal content to millions? That’s the core conflict tearing at Telegram’s foundation. Unlike Facebook or WhatsApp, Telegram doesn’t proactively scan the content it can see for illegal material. For years, it stayed out of global child safety coalitions. And until recently, it claimed zero responsibility for what users post in public channels with millions of subscribers. That stance is now being challenged in a French criminal case - and the consequences could reshape how every messaging app operates.

Telegram’s Design Is the Problem

Telegram’s architecture isn’t an accident. It was built to resist control. Cloud chats use client-server encryption by default, not end-to-end encryption. Secret Chats are end-to-end encrypted, but they’re optional, limited to one-on-one conversations, and rarely used. Public channels? Unlimited subscribers, little proactive moderation, no automatic content filters, and a report button that critics say too often goes unanswered. This design lets users share anything - from news to scams to child sexual abuse imagery - with little interference. And that’s exactly what regulators say makes Telegram dangerous.

The Stanford Internet Observatory found Telegram failing to block known child abuse content even after it was reported. In Q3 2024, the Internet Watch Foundation recorded 384,000 reports of such material. Twelve percent came from Telegram channels. Yet for years Telegram refused to join the IWF, even though WhatsApp, Instagram, and Snapchat were members. When Heidi Kempster, IWF’s deputy CEO, said, “There’s no excuse,” she wasn’t exaggerating. Telegram chose not to join. Not because it couldn’t. Because it didn’t want to.

The Durov Arrest Changed Everything

On August 24, 2024, Pavel Durov was arrested at a Paris airport. Days later, he was placed under formal investigation on six counts, including complicity in distributing child sexual abuse material and in drug trafficking. French investigators didn’t accuse him of posting illegal content. They accused him of running a platform that made it easy - and profitable - for others to do so.

Durov was released on €5 million bail, but he was barred from leaving France and ordered to report to police twice a week. This isn’t a routine fine. It’s a criminal investigation targeting the founder of a tech company over the actions of its users, a move with little precedent. In the U.S., Section 230 shields platforms from most liability for user content. In Europe, the Digital Services Act (DSA) offers only a conditional shield: platforms keep it only if they act quickly once they learn of illegal content. And Telegram, with an estimated 40-50 million users in the EU, may exceed the 45 million threshold for a Very Large Online Platform (VLOP), a designation that brings stricter rules. But authorities suspect Telegram understated its EU user count to avoid that label.

How Telegram Compares to Other Apps

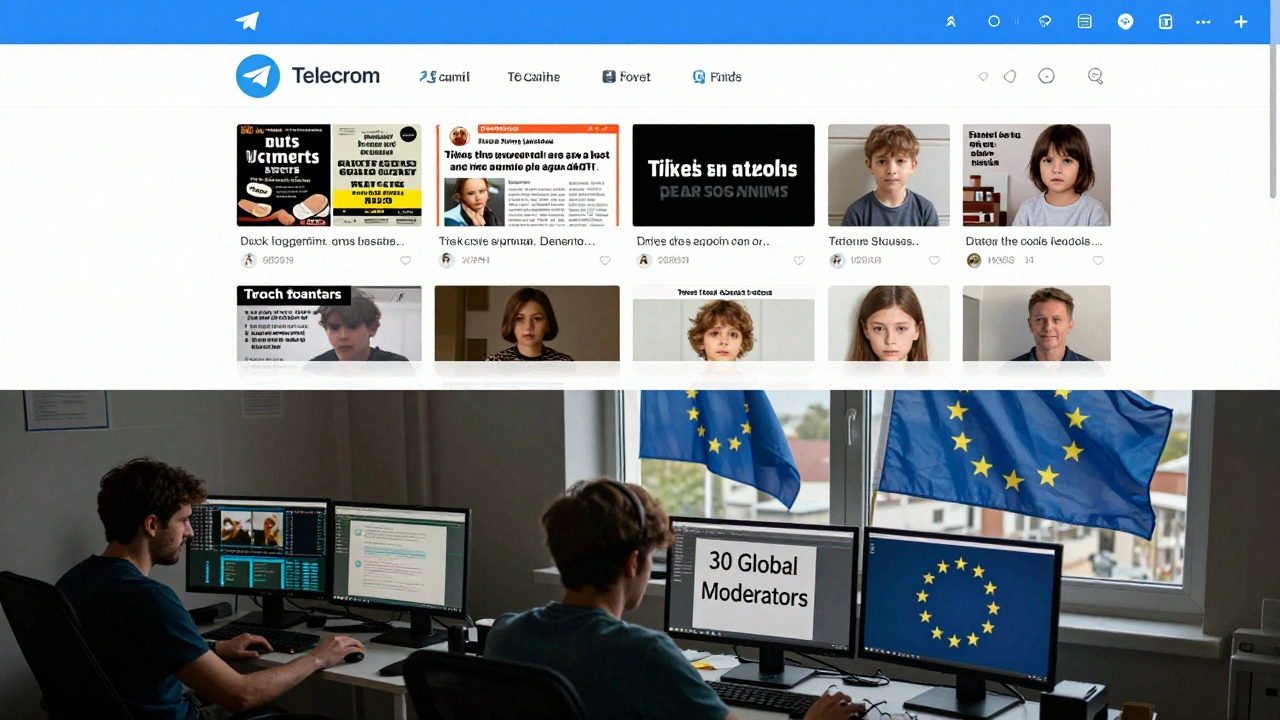

Compare Telegram to WhatsApp. WhatsApp processes 100 million user reports a year. It uses AI to detect child abuse material on the parts of the service it can see. It’s a member of the IWF. It works with law enforcement. Telegram? As of August 2024, it reportedly had about 30 content moderators globally, a tiny team for a service with hundreds of millions of users. John Shehan of the National Center for Missing & Exploited Children called Telegram “in a league of their own” for ignoring child exploitation. And he’s not alone.

Signal, another encrypted app, doesn’t store message content and keeps almost no metadata. It can’t read what users send. But it still lets users report spam, and it answers lawful requests, even though all it can hand over is minimal account data. Telegram claims it can’t do the same - but that’s not true. Signal proves it’s possible. As one Hacker News commenter put it: “Signal proves you can have strong encryption AND effective reporting mechanisms.” Telegram’s choice isn’t technical. It’s ideological.

Legal Gray Zones and Global Fallout

Europe’s DSA requires platforms to remove illegal content quickly, to act on orders from EU authorities, and to be transparent about how they do it. Telegram has long boasted that it has “disclosed no user data to third parties.” That blanket stance is hard to square with a law that expects platforms to cooperate with lawful orders. GDPR is a weaker line of attack. Users can delete their accounts and export their data. And collecting little data is not a violation in itself: GDPR’s data-minimization principle asks platforms to collect no more than they need.

Legal experts are divided. Professor Tarunabh Khaitan at Oxford says the Durov case could “reshape how we think about intermediary liability globally.” But David Kaye, the former UN Special Rapporteur on freedom of expression, warns that criminalizing platform owners sets a dangerous precedent: authoritarian regimes could use it to jail journalists or activists who run dissenting channels. A Law Society Gazette survey found that 67% of EU tech lawyers believe the case will push all platforms toward stricter moderation, while 33% fear it will kill innovation in privacy tools.

What Telegram Did After the Arrest

After Durov’s arrest, Telegram made a few changes - but most were small. It disabled new media uploads on Telegraph, its blogging tool. It removed the “People Nearby” feature, which scammers used to target users. It updated its privacy policy to say it would share the IP addresses and phone numbers of rule-breakers with authorities in response to valid legal requests. In December 2024, it finally joined the IWF. And it shut down two major criminal marketplaces on its platform: Huione Guarantee and Xinbi Guarantee. These were hubs for fraud, counterfeit goods, and stolen data. Telegram says it acted because “these were clear violations.”

But here’s the problem: these changes came only after a founder was arrested. None of them was proactive. Before the arrest, Telegram’s own FAQ suggested that it would hand over user data only if forced by court orders from several different jurisdictions. That posture shifted only under legal pressure. And critics say the platform still does far too little to moderate public channels proactively.

Users Are Split - And Losing Trust

On Reddit, users are divided. “PrivacyWarrior89” says: “I switched from WhatsApp after Durov’s arrest because I value real privacy.” But “SafetyFirstMom” replied: “My nephew found CSAM on Telegram last week - this isn’t about privacy, it’s about protecting children.”

Trustpilot ratings dropped from 4.2 to 3.1 stars after the arrest. Sixty-two percent of new negative reviews blamed “inadequate content moderation.” The platform’s reputation is crumbling - not because of hacking or outages, but because users realize: if you don’t care about safety, you’re not a platform. You’re a conduit for chaos.

The Bigger Picture: Can Telegram Survive?

Telegram has more than 900 million users globally. But its future depends on Europe. Goldman Sachs estimates a 40% chance of “significant EU market restrictions” within two years if Telegram doesn’t change. Under the DSA, the EU can fine it up to 6% of its global annual turnover. As a last resort, it can also seek a temporary block on access to the service. And with 58% of EU citizens supporting stricter rules for encrypted apps, public opinion is turning.

Privacy advocates at the Electronic Frontier Foundation argue that encryption must be defended - even when abused. But they also admit: “Telegram’s approach isn’t about encryption. It’s about avoidance.”

The real question isn’t whether Telegram can be hacked or banned. It’s whether it can adapt. Can it build moderation tools without breaking encryption? Can it cooperate with authorities without betraying users? Can it keep its privacy promise and still be legal?

So far, the answer is no. And the clock is ticking.

Is Telegram legally responsible for what users post?

Under the EU’s Digital Services Act, platforms like Telegram lose their liability shield if they fail to act on illegal content once they know about it. Services with more than 45 million monthly users in the EU also face extra obligations as Very Large Online Platforms. Telegram is suspected of underreporting its EU user count to avoid that designation. U.S. platforms are protected by Section 230, but Telegram enjoys only conditional protection in Europe. The criminal case against Pavel Durov, brought under French law, shows that European authorities are now willing to hold platform owners personally accountable for systemic failures in moderation.

Why doesn’t Telegram scan for child abuse content?

Telegram claims scanning private messages would break encryption. But it only uses end-to-end encryption in optional Secret Chats. Most messages are stored on its servers using client-server encryption. That means Telegram holds the keys and could, in principle, scan them. It chooses not to because it prioritizes absolute user control over safety. It also refused for years to join the Internet Watch Foundation, a coalition that helps platforms detect and remove child abuse imagery. Its major competitors had already taken that step.

Can Telegram be banned in the EU?

In principle, yes. Under the Digital Services Act, a platform may persistently violate rules on illegal content, transparency, or user safety. In that case, authorities can seek a temporary restriction of access to the service as a last resort. They can also impose fines of up to 6% of global annual turnover. Outright bans are rare. But partial restrictions, such as blocking access to public channels or requiring moderation tools, are already being discussed by EU regulators.

How does Telegram’s moderation compare to WhatsApp or Signal?

WhatsApp uses AI systems to scan the unencrypted parts of its service for child abuse material, and it processes 100 million reports annually. Signal offers spam reporting and answers lawful requests while keeping encryption intact. Telegram reportedly had about 30 global moderators as of August 2024 and little automated detection. It was slow to join industry coalitions. It doesn’t publish transparency reports. Its approach is reactive: it acts mainly after public pressure or legal threats. This makes it the least moderated major messaging app.

Does Telegram comply with GDPR?

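The GDPR case against Telegram is weaker than it looks. GDPR requires platforms to let users access or delete their data, and Telegram does offer account deletion and a data export tool. Telegram has also long said that it stores minimal information and has disclosed no user data to governments. That may frustrate investigators, but it isn’t a GDPR violation. The regulation’s data-minimization principle requires platforms to collect no more data than they need. And a company isn’t obliged to keep extra data just to identify users for access or erasure requests. Telegram’s real legal exposure in Europe comes from the DSA and criminal law, not GDPR.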

What happens if Pavel Durov is convicted?

A conviction would set a global precedent: platform founders can be criminally liable for user behavior if their design enables abuse. It could lead to more arrests of tech CEOs in Europe. It might force all encrypted apps to add moderation tools, even if they claim to be “privacy-first.” It could also scare investors away from encrypted startups, fearing personal legal risk. Even if Durov is acquitted, the case has already changed how regulators view platform responsibility.

Is Telegram still safe to use?

If you use Secret Chats and avoid public channels, your messages are end-to-end encrypted and private. But if you follow public channels, join groups, or use Telegram for news, you’re exposed to lightly moderated content - including scams, hate speech, and illegal material. Safeguards are thin, and you’re largely on your own. For many, that’s a trade-off they’re no longer willing to make.