Telegram isn’t just a messaging app anymore. It’s where political news breaks, rumors spread faster than facts, and entire communities are shaped by unverified claims. With over 950 million active users as of mid-2025, Telegram has become the go-to platform for political discourse - especially in countries where traditional media is restricted or distrusted. But here’s the problem: misinformation thrives here. Not because people are stupid, but because the system is designed to let it grow unchecked.

Why Telegram Is a Perfect Storm for Fake News

Unlike Facebook or Twitter, Telegram doesn’t moderate content by default. There’s no algorithm pushing you toward outrage. No “Community Notes” to correct lies. Instead, you get public channels with millions of followers, private groups where no one sees what’s posted, and secret chats that are completely off-limits to anyone but the participants. That freedom is great for privacy - but terrible for truth. Political misinformation spreads easily because of three key features:

- Unlimited forwarding: A single fake headline can be sent to hundreds of channels in seconds.

- No verification badges: Only 12.4% of political channels display any kind of official or third-party verification mark as of May 2025.

- Anonymous bots: AI-generated deepfakes and automated accounts mimic real politicians, pushing phishing links or false election results.

What’s Changed in 2025 - And What Hasn’t

Telegram did roll out some updates in early 2025, but they’re patchy at best.

- “Forwarded many times” labels: Now appear when a message has been shared across 50+ channels. But most users ignore them.

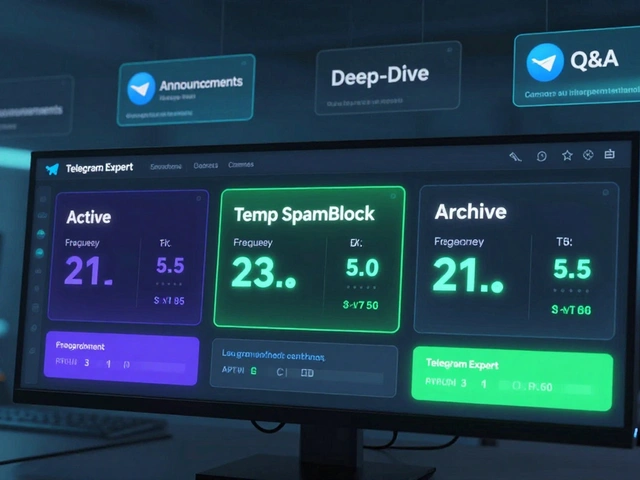

- Third-party verification: Government agencies and academic groups can now verify channels with blue (official) or purple (third-party) badges. But adoption is low - only 18.3% of political channels use it.

- Keyword filters: You can now filter private groups by terms like “voting fraud” or “coup.” But you have to turn them on manually.

- AI warnings: In Ukrainian channels, Telegram started showing yellow banners with fact-check links next to flagged political posts. Users accepted them 83.2% of the time. But this feature hasn’t rolled out globally.
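The keyword filters in this list come down to matching trigger phrases and hiding whatever matches. Below is a minimal Python sketch of that matching logic. It is not Telegram's implementation, and it assumes case-insensitive, whole-word matching. Under that assumption, "rigged" catches "RIGGED!" but not "unrigged", and "deep state" catches "Deep   State" but not "deep stateless". The names `build_filter` and `split_feed` are hypothetical.

```python
import re

# Example trigger phrases taken from the article; the list itself is illustrative.
TRIGGERS = ["rigged", "stolen election", "deep state", "voting fraud", "military coup"]


def build_filter(phrases):
    """Compile one case-insensitive regex that matches any phrase as whole words."""
    # re.escape guards against punctuation in phrases; \s+ lets multi-word
    # phrases match across extra spaces or line breaks.
    parts = [r"\s+".join(map(re.escape, p.split())) for p in phrases]
    # \b on both ends stops "rigged" from matching inside "unrigged".
    return re.compile(r"\b(?:" + "|".join(parts) + r")\b", re.IGNORECASE)


def split_feed(messages, pattern):
    """Split messages into (shown, hidden) lists: anything matching is hidden."""
    shown, hidden = [], []
    for msg in messages:
        (hidden if pattern.search(msg) else shown).append(msg)
    return shown, hidden
```

Whole-word matching is a deliberate trade-off. It avoids hiding innocent words that merely contain a trigger, but it will still miss deliberate misspellings such as "r1gged".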

How Misinformation Targets Real People

It’s not just about fake headlines. It’s about trust. A 2025 Reddit analysis of 1,247 scam cases showed that 68% of people who fell for fake political giveaways ended up with their accounts hacked. Scammers impersonate politicians, parties, or even journalists. One user, u/PoliticalWatchdog, described how a deepfake video of a European politician appeared in a Telegram group. The video looked real, the politician spoke perfect German, and the clip asked users to click a link to “verify their voter status.” That link installed malware.

Kaspersky reported that 83% of political misinformation campaigns in early 2025 used Telegram bots - not websites. Why? Because users don’t leave the app. They think it’s safe. It’s not. Telegram’s end-to-end encryption only applies to secret chats. Public channels? All their data is stored on Telegram’s servers, and anyone can scrape, copy, or doctor it.

And here’s the scary part: 63.2% of users who got fooled by misinformation had two-step verification turned off. Without it, a scammer only needs the login code Telegram sends to your phone number. They can get it by tricking you into sharing it or by hijacking your SIM, and then take over your account in minutes.

What You Can Do Right Now

You can’t control what’s posted. But you can control what you do next.

1. Turn on Two-Step Verification

Go to Settings > Privacy and Security > Two-Step Verification. Set a password you won’t forget. This blocks 92.7% of account takeovers, according to ESET’s 2025 security guide. Don’t skip this.

2. Use the “10x Verification Rule”

Before you forward anything political, ask yourself 10 questions:

- Who posted this?

- Is there a verification badge?

- Has it been forwarded over 50 times?

- Can I find it on an official government site?

- Does it use emotional language like “They’re hiding the truth!”?

- Is there a link? Does it lead to a known .gov or .org site, or to a weird .xyz domain?

- Is the photo or video edited? (Use Google Reverse Image Search.)

- Does it claim to be “breaking news” but carry no date or source?

- Are other trusted channels sharing this?

- Would I say this out loud to a friend without proof?
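A few of these questions can be checked mechanically before a human weighs the rest. The Python sketch below is a hypothetical helper, not a Telegram feature. It assumes a post is a plain dict with `text`, `forwards`, and `verified` keys, which are names invented for illustration. It flags only the automatable items from the list above: no badge, more than 50 forwards, emotional phrasing, and links outside a small allow-list of official domains. Both lists are placeholders. The allow-list sticks to government suffixes because anyone can register a .org domain.

```python
import re
from urllib.parse import urlparse

# Illustrative lists only; adjust them for your own country and language.
EMOTIONAL_PHRASES = ["they're hiding the truth", "share before it's deleted", "wake up"]
OFFICIAL_SUFFIXES = (".gov", ".gov.uk", ".europa.eu")
URL_PATTERN = re.compile(r"https?://\S+")


def red_flags(post: dict) -> list:
    """Return the automatable red flags for one post.

    `post` is an assumed shape: {"text": str, "forwards": int, "verified": bool}.
    """
    flags = []
    text = post.get("text", "")
    # Normalize curly apostrophes (U+2019) so "They’re" matches "they're".
    lowered = text.lower().replace("\u2019", "'")
    if not post.get("verified", False):
        flags.append("no verification badge")
    if post.get("forwards", 0) > 50:
        flags.append("forwarded more than 50 times")
    if any(phrase in lowered for phrase in EMOTIONAL_PHRASES):
        flags.append("emotional language")
    for url in URL_PATTERN.findall(text):
        # Drop trailing punctuation the regex swallows at the end of a sentence.
        host = (urlparse(url.rstrip(".,!?)")).hostname or "").lower()
        if not host.endswith(OFFICIAL_SUFFIXES):
            flags.append(f"link to unfamiliar domain: {host}")
    return flags
```

An empty result does not mean a post is true. It only means none of the mechanical checks fired, and the questions about sources, edits, and context still need a person.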

3. Install FactStream (for Web Users)

FactStream v2.4, released in April 2025, is a free browser extension that works with Telegram Web. It checks political claims against 12 major fact-checking databases (like AP, Reuters, and PolitiFact) and flags false statements with 82.6% accuracy. It doesn’t block content - it just shows you a warning. You still decide what to share. But now you’re informed.

4. Filter Your Groups

Open any private group. Tap the three dots > Search > Enable Keyword Filters. Add common misinformation triggers: “rigged,” “stolen election,” “deep state,” “voting fraud,” “military coup.” When someone posts those phrases, the message gets hidden automatically. You can still see it if you search - but it won’t pop up in your feed.

5. Only Follow Verified Channels

Look for the blue or purple badge. Blue means the channel is officially linked to a government agency, news outlet, or university. Purple means a third-party organization like the International Fact-Checking Network has verified its credibility. If there’s no badge? Assume it’s untrustworthy.

What Communities and Moderators Should Do

If you run a political Telegram group, you have power. Use it.

- Set clear rules: No forwarding without verification. No anonymous bots. No unverified claims about elections or policy.

- Use auto-delete bots: Bots built on Telegram’s Bot API, or third-party moderation bots like AutoModerator, can automatically delete messages containing known false keywords. One study showed this cut false claims by 63.4% in monitored groups.

- Pin a daily fact-check: Every morning, post one verified update from a trusted source. It trains people to look for real news, not rumors.

- Report everything: Even if you think it won’t help. Telegram’s AI learns from reports. More reports = better detection.
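If you build your own auto-delete bot on Telegram's Bot API, the core loop has three steps. Read each incoming message, test its text against a keyword list, and call the Bot API's `deleteMessage` method with that message's `chat_id` and `message_id`. The Python sketch below shows only the decision and the request URL it would produce. It assumes updates arrive as the JSON objects the Bot API returns: an `Update` with a `message` containing `message_id`, `chat.id`, and `text`. `BOT_TOKEN` is a placeholder, and the keyword list is hypothetical.

```python
import re
from typing import Optional
from urllib.parse import urlencode

BOT_TOKEN = "123456:PLACEHOLDER"  # placeholder; a real token comes from @BotFather
API_BASE = f"https://api.telegram.org/bot{BOT_TOKEN}"

# Hypothetical keyword list; whole-word, case-insensitive.
BANNED = re.compile(r"\b(?:stolen\s+election|voting\s+fraud)\b", re.IGNORECASE)


def delete_request_for(update: dict) -> Optional[str]:
    """Return a deleteMessage URL if the update's text matches BANNED, else None."""
    message = update.get("message")
    # Skip updates without a plain-text message (edits, photos, member joins, ...).
    if not message or "text" not in message:
        return None
    if not BANNED.search(message["text"]):
        return None
    params = {"chat_id": message["chat"]["id"], "message_id": message["message_id"]}
    return f"{API_BASE}/deleteMessage?{urlencode(params)}"
```

A real bot would receive updates through `getUpdates` or a webhook and send each returned URL with an HTTP client such as `urllib.request.urlopen`. For the call to succeed, the bot generally has to be a group admin with permission to delete messages. Keeping the decision separate from the network call lets you test the keyword list before it starts removing real posts.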

The Bigger Picture: Regulation vs. Freedom

The EU fined Telegram 47 times in early 2025 for failing to remove misinformation. India now requires traceability for messages sent to over 1,000 people. The U.S. is pushing voluntary partnerships. Telegram says it’s working with MIT and Oxford on a “Political Integrity Framework” - a system that rates channels based on 47 metrics like citation quality and source transparency.

But here’s the tension: if Telegram starts blocking too much, users will flee to even less regulated apps like Session or Threema. That’s already happening - activity on those platforms rose 22.4% after Telegram’s August 2025 crackdown.

The real solution isn’t just better tech. It’s better users. People who pause before sharing. Who check sources. Who demand proof. Telegram won’t fix this for you. No app will. But you can.

What’s Coming Next

By late 2026, Telegram plans to cap forwarding from unverified political channels at just five channels per message. Verified channels will still be able to forward freely. That’s a big shift - and a controversial one. Human rights groups warn it could silence legitimate dissent. Meanwhile, pilot programs are testing blockchain-verified claims in German political channels. Imagine a system where every claim is stamped with a digital signature from a trusted fact-checker. If it works, it could change how we trust information forever. But for now? The ball is in your court.

Every time you forward something without checking, you’re not just sharing a message. You’re spreading doubt. You’re weakening truth. And in a world where politics is already fractured, that’s the most dangerous thing of all.

How do I know if a Telegram political channel is real?

Look for a verification badge - blue for official entities like government agencies or major news outlets, purple for channels verified by a third party such as a fact-checking organization. Only 12.4% of political channels have these badges as of May 2025. If there’s no badge, assume it’s unverified. Check the channel’s description for links to official websites or contact info. Real channels rarely use vague names like "Truth News" or "Free Speech Now." They use clear, identifiable names like "BBC News" or "Reuters Politics."

Can Telegram stop misinformation on its own?

Telegram has improved its AI detection tools and now removes content that violates its rules, but enforcement is inconsistent. Only 37.2% of reported misinformation is removed, according to the CyberPeace Institute. The platform relies heavily on user reports and third-party verification, not proactive moderation. Without stronger global regulation and user cooperation, Telegram alone cannot stop the spread of fake political news.

Why is Telegram worse than WhatsApp for misinformation?

WhatsApp limits forwarding to five chats at once and shows a "forwarded many times" label. Telegram only added a similar label in early 2025. Its planned forwarding cap would apply only to unverified political channels. Telegram has also long hosted public channels with millions of followers, while WhatsApp only added its one-way Channels feature in 2023. This makes Telegram a far more powerful tool for mass misinformation campaigns. Plus, Telegram’s lack of centralized moderation means fake news stays up longer.

Are Telegram bots dangerous for political information?

Yes. In Q1 2025, 83% of political misinformation campaigns used Telegram bots - not websites. These bots can mimic real people, send automated replies to keywords like "election fraud," and deliver malware through links inside the app. Users think they’re safe because they never leave Telegram. But bots can steal login codes, trick you into downloading fake apps, or spread deepfake videos. Never click links from bots, even if they claim to be from a political party.

What should I do if I see fake news in a Telegram group?

Don’t just report it - respond. Reply to the message with a link to a trusted source that contradicts it. For example, if someone shares a fake claim about a new law, reply with a link to the official government website. This helps others see the truth without deleting the post. Then report the message using Telegram’s built-in reporting tool. Also, consider leaving the group if it’s consistently spreading false information. Silence helps misinformation grow.

Is two-step verification really that important on Telegram?

Yes. Without two-step verification, anyone who gets hold of the login code Telegram sends to your phone number can take over your account in minutes. They might get it by tricking you into sharing it or by hijacking your SIM. Two-step verification adds a password that is required on top of that code. ESET’s 2025 security report shows that enabling this feature blocks 92.7% of account takeovers. It’s the single most effective step you can take to protect yourself from scams, impersonation, and misinformation campaigns that use your account to spread lies.