By 2025, Telegram has become one of the most powerful platforms for spreading misinformation, not because it’s designed for it, but because it’s perfect for it. Channels with millions of subscribers can push the same false claim across borders in seconds. Traditional fact-checking teams, used to working with news articles and public tweets, can’t keep up. That’s where collaborative fact-checking networks powered by Telegram bots come in. These aren’t sci-fi tools. They’re real, running right now, and changing how misinformation is caught before it goes viral.

How These Networks Actually Work

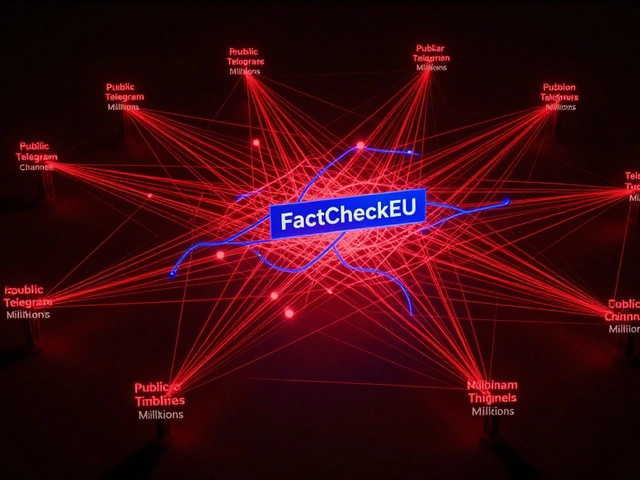

Think of a Telegram bot as a tireless assistant that never sleeps. It scans every message in a channel, looks for patterns, and flags anything suspicious. But here’s the twist: it doesn’t act alone. It passes the flagged content to a network of human fact-checkers (journalists, researchers, volunteers) who verify it in minutes. The bot handles the heavy lifting: sorting through thousands of messages, spotting duplicate claims, tracking how fast a lie spreads, and even identifying coordinated accounts that push the same false story across dozens of channels.

One of the most effective systems, developed by EPFL’s SPRING Lab, uses the Leiden algorithm, a community-detection method for graphs, to map out hidden networks of accounts. These aren’t random users. They’re often bots or coordinated human operators pushing propaganda. The system found that in some political Telegram channels, up to 5% of all messages were part of these campaigns. Without bots, it would take weeks to find them. With bots, it takes under 15 minutes to scan 50,000 messages.
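The sketch below illustrates the kind of signal such a system looks for, under loudly stated assumptions: it does not implement Leiden. As a simpler stand-in, it links accounts that post the same lightly normalized text within a short time window and merges linked accounts with a union-find structure (plain connected components). The 10-minute window, the minimum group size of three, and all function names are hypothetical. A fuller pipeline would likely turn these links into a weighted graph and run an actual community-detection algorithm, such as Leiden via the `leidenalg` package, instead of taking connected components.

```python
import re
from collections import defaultdict

def normalize(text):
    """Lowercase, replace punctuation with spaces, and collapse whitespace."""
    text = re.sub(r"[^\w\s]", " ", text.lower())
    return " ".join(text.split())

class UnionFind:
    """Disjoint-set structure used to merge linked accounts into groups."""
    def __init__(self):
        self.parent = {}

    def find(self, x):
        self.parent.setdefault(x, x)
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a, b):
        ra, rb = self.find(a), self.find(b)
        if ra != rb:
            self.parent[rb] = ra

def coordinated_groups(messages, window_seconds=600, min_size=3):
    """messages: iterable of (account, unix_seconds, text).

    Consecutive posts of the same normalized text by different accounts,
    at most window_seconds apart, link those accounts; links can chain.
    Returns groups of at least min_size linked accounts.
    """
    by_text = defaultdict(list)
    for account, ts, text in messages:
        by_text[normalize(text)].append((ts, account))

    uf = UnionFind()
    for posts in by_text.values():
        posts.sort()
        for (t1, a1), (t2, a2) in zip(posts, posts[1:]):
            if a1 != a2 and t2 - t1 <= window_seconds:
                uf.union(a1, a2)

    groups = defaultdict(set)
    for account in list(uf.parent):
        groups[uf.find(account)].add(account)
    return [g for g in groups.values() if len(g) >= min_size]
```

For example, if accounts `a`, `b`, and `c` post the same slogan (with different punctuation) within two minutes, and account `e` posts it many hours later, only `{a, b, c}` comes back as a group.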

Why Telegram? The Platform Advantage

Why not use Twitter or Facebook? Because those platforms lock down their data. Telegram doesn’t. It lets anyone scrape public messages, track view counts, and monitor how content moves from one channel to another. That’s huge. When a false claim about a new vaccine spreads from a Russian-language channel to a Spanish one, then to Arabic, the bot can trace that path. It sees how the same image or headline gets reshared with tiny tweaks, enough to fool algorithms that only look for exact matches.
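One common way to catch reshared text with small edits is to compare overlapping character chunks instead of whole strings. The minimal sketch below, an illustration rather than any specific tool’s method, splits each message into 5-character shingles and measures Jaccard similarity (shared shingles divided by all distinct shingles). The 0.6 threshold and the shingle length are assumptions to tune. Images would need a different technique, such as perceptual hashing, which this sketch does not cover.

```python
from __future__ import annotations

def shingles(text: str, k: int = 5) -> set[str]:
    """Overlapping character k-grams after lowercasing and collapsing whitespace."""
    t = " ".join(text.lower().split())
    if len(t) <= k:
        return {t}
    return {t[i:i + k] for i in range(len(t) - k + 1)}

def jaccard(a: set[str], b: set[str]) -> float:
    """Share of shingles the two texts have in common (1.0 = identical sets)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)

def is_near_duplicate(x: str, y: str, threshold: float = 0.6) -> bool:
    return jaccard(shingles(x), shingles(y)) >= threshold
```

For example, `is_near_duplicate("New vaccine causes memory loss in children, doctors warn", "NEW vaccine causes memory loss in children, doctors warn!!")` returns `True`, even though an exact string comparison would treat the two as different posts.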

Telegram’s view counter is another secret weapon. On many other platforms, you can’t easily tell how many people saw a post. On Telegram, you see exact numbers. If a lie gets 200,000 views in two hours, the bot knows it’s not just noise; it’s a threat. That’s why fact-checkers at AFP identified a viral mpox hoax in just 27 minutes during the 2023 outbreak. On other platforms, that might have taken days.
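The velocity check described here reduces to simple arithmetic: 200,000 views in two hours is 100,000 views per hour. The sketch below assumes, hypothetically, that the monitoring bot records periodic (timestamp, view count) snapshots for each post; how those snapshots are collected is out of scope, and the default threshold simply mirrors the example above.

```python
def views_per_hour(samples):
    """samples: (unix_seconds, view_count) snapshots of one post, in any order."""
    if len(samples) < 2:
        return 0.0
    ordered = sorted(samples)
    (t0, v0), (t1, v1) = ordered[0], ordered[-1]
    hours = (t1 - t0) / 3600
    return (v1 - v0) / hours if hours > 0 else 0.0

def is_spreading_fast(samples, threshold_per_hour=100_000):
    """Flag a post whose average view growth meets the threshold."""
    return views_per_hour(samples) >= threshold_per_hour
```

Averaging over the whole observation window keeps the sketch simple; a real monitor might also look at the most recent interval to catch a sudden surge.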

How Accurate Are These Bots?

They’re good, but not perfect. The Universidad de Navarra’s AI model, trained on over 247,000 examples of real disinformation, catches false claims with 92.3% accuracy in English. Switch to Arabic, though, and accuracy drops to 63.4%. Why? Because the training data is mostly in English, Spanish, and Russian. Most systems lack enough examples in languages from Southeast Asia and Africa, or in Indigenous languages.

False positives are another problem. In politically tense situations, bots often flag legitimate criticism as propaganda. One fact-checker reported that 30% of flagged content needed human review just to avoid silencing real debate. That’s why hybrid systems exist: bots flag, humans decide. The EPFL system, for example, reduces moderation time by 80%, but still relies on experts to make the final call.
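The “bots flag, humans decide” split can be made explicit in code: the bot’s only output is whether a message enters a review queue, and only a human verdict attaches a label. The sketch assumes a hypothetical classifier that emits a score between 0 and 1; the 0.5 threshold and the `Flag`/`ReviewQueue` names are illustrative and not part of the EPFL system.

```python
from dataclasses import dataclass, field

@dataclass
class Flag:
    message_id: int
    text: str
    score: float  # hypothetical model score in [0, 1]; higher = more suspicious

@dataclass
class ReviewQueue:
    flag_threshold: float = 0.5
    pending: list = field(default_factory=list)
    verdicts: dict = field(default_factory=dict)

    def triage(self, flag: Flag) -> str:
        """The bot only decides whether a human should look; it never labels anything false."""
        if flag.score < self.flag_threshold:
            return "not_flagged"
        self.pending.append(flag)
        # Keep the most suspicious items at the front of the queue.
        self.pending.sort(key=lambda f: f.score, reverse=True)
        return "queued_for_review"

    def record_verdict(self, message_id: int, verdict: str) -> None:
        """Only a human reviewer's call produces a label such as 'false' or 'legitimate'."""
        self.pending = [f for f in self.pending if f.message_id != message_id]
        self.verdicts[message_id] = verdict
```

Because the queue never writes a verdict on its own, a high false-positive rate costs reviewer time rather than silencing legitimate criticism.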

And then there’s the video problem. Short clips, especially those without clear audio or text, are hard for bots to analyze. A 15-second clip of someone pretending to be a doctor giving false medical advice? The bot sees a video file. It doesn’t know what’s being said unless someone transcribes it. That’s why EPFL’s new version 3.0, released in May 2025, added multimodal analysis: it can now analyze audio and visual cues together. Accuracy on video verification jumped from 32% to 78%.

Who’s Using These Tools?

Not big tech companies. Not governments. Mostly independent fact-checking groups, journalists, and academic teams. According to the Reuters Institute, 47% of professional fact-checking organizations now use Telegram bot networks, up from just 12% in 2021. In Europe and North America, it’s even higher. Organizations like AFP, Reuters, and local media in Germany and Poland rely on them daily.

Commercial tools like Check First, founded in 2021, offer subscription services to newsrooms. Open-source tools like the Telepathy toolkit are used by smaller groups and volunteers. The toolkit’s main GitHub repository has over 1,850 stars, and a Telegram channel with 4,200 members shares tips, updates, and false claim reports.

But adoption is uneven. Journalists using tools like Telemetr and TGStat say they’re powerful, but only if you know how to read the data. Network maps showing which channels link to which can look like spaghetti. Without training, you can’t tell if a connection is meaningful or just noise.
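One way to make a spaghetti-like channel map readable is to weight each link by how often one channel forwards another and hide links below a minimum count. The sketch below is a hypothetical illustration, not how Telemetr or TGStat build their maps; it assumes you already have a list of (source channel, target channel) pairs, one per forwarded post, and the cut-off of five forwards is arbitrary.

```python
from collections import Counter

def prune_edges(forwards, min_forwards=5):
    """forwards: iterable of (source_channel, target_channel), one pair per forwarded post.

    Counts how often each directed link occurs, ignores self-forwards,
    and keeps only links seen at least min_forwards times.
    """
    counts = Counter((src, dst) for src, dst in forwards if src != dst)
    return {edge: n for edge, n in counts.items() if n >= min_forwards}
```

With six forwards from channel A to B, five from B to C, and a single one from A to C, only the A to B and B to C links survive, which is usually the structure an analyst cares about first.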

The Arms Race Is Real

Disinformation actors aren’t sitting still. Dark web Telegram groups are actively building tools to fool these bots. They change wording slightly, swap out images, or delay posting to avoid detection windows. One campaign, called Operation Overload, floods fact-checking services with fake verification requests, overloading them so real threats slip through.

Telegram itself doesn’t help. There’s no official API built for fact-checking, so many tools rely on unofficial or reverse-engineered methods. And when Telegram updates its app, which happens every few months, those methods break. A Cyble survey in November 2023 found that 68% of users had integration issues after a major update. The best teams now build modular systems: if the Telegram API changes, they swap out one piece without rebuilding everything.
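The modular design described above is essentially the adapter pattern: isolate everything that talks to Telegram behind one small interface so the rest of the pipeline never depends on how messages are collected. The sketch uses Python’s `typing.Protocol`; `MessageSource`, `StaticSource`, and `run_pipeline` are hypothetical names, and the exact-match claim check is a placeholder for real analysis. When an access method breaks, only a new class with a `fetch()` method needs to be written, for example one wrapping the Bot API and another wrapping an MTProto client library.

```python
from typing import Iterable, Protocol

class MessageSource(Protocol):
    """The only component that talks to Telegram; swap implementations when access changes."""
    def fetch(self) -> Iterable[str]: ...

class StaticSource:
    """Hypothetical stand-in source, e.g. for tests or an exported message archive."""
    def __init__(self, messages):
        self.messages = list(messages)

    def fetch(self) -> Iterable[str]:
        return iter(self.messages)

def run_pipeline(source: MessageSource, known_false: set) -> list:
    """Downstream logic depends only on MessageSource, not on how messages are collected."""
    return [m for m in source.fetch() if m.strip().lower() in known_false]
```

Because `Protocol` uses structural typing, a replacement source does not have to inherit from anything; it only needs a compatible `fetch()` method.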

What’s Next?

The next big leap? Blockchain-based verification ledgers. Imagine every fact-checking decision recorded on a public, tamper-proof ledger. If a bot flags a claim and a human confirms it’s false, that decision gets added to the chain. Other networks can trust it without redoing the work. This is planned for late 2025.
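The tamper-evident property comes from hash chaining: each entry’s hash covers both its own record and the previous entry’s hash, so editing any past decision invalidates every hash after it. The sketch below shows only that core idea, using SHA-256 from Python’s standard library; it has no distribution or consensus, which a real blockchain ledger would add, and the record fields are hypothetical.

```python
import hashlib
import json

def entry_hash(prev_hash: str, record: dict) -> str:
    """Hash the record together with the previous hash (canonical JSON, sorted keys)."""
    payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

class VerificationLedger:
    """Append-only, hash-chained log of fact-checking decisions (tamper-evident, not distributed)."""
    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []  # list of (record, hash) tuples

    def append(self, record: dict) -> str:
        prev = self.entries[-1][1] if self.entries else self.GENESIS
        stored = dict(record)  # copy so later edits by the caller don't leak in
        h = entry_hash(prev, stored)
        self.entries.append((stored, h))
        return h

    def verify(self) -> bool:
        """Recompute every hash in order; any edited record breaks the chain."""
        prev = self.GENESIS
        for record, h in self.entries:
            if entry_hash(prev, record) != h:
                return False
            prev = h
        return True
```

After appending decisions, `verify()` returns `True`; quietly changing an earlier verdict stored in `entries` makes it return `False`.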

Another idea: AI-powered source credibility scoring. Instead of just checking content, the system will rate the channel itself. Has it spread lies before? Does it copy content from known propaganda sources? Is it only active during elections or crises? That’s coming in 2026.
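Channel-level scoring could combine the three questions above into one number. The sketch is purely illustrative: the `ChannelHistory` fields, the linear formula, and the 0.5/0.3/0.2 weights are assumptions, not the planned system’s design, and a production model would more likely learn its weights from labeled data.

```python
from dataclasses import dataclass

@dataclass
class ChannelHistory:
    debunked_posts: int               # posts later rated false by human fact-checkers
    total_posts: int
    copied_from_flagged_sources: int  # posts copied or forwarded from known propaganda channels
    active_days_in_crises: int        # active days that fall inside election or crisis windows
    active_days_total: int

def credibility_score(h: ChannelHistory) -> float:
    """Return 1.0 (no red flags) down to 0.0. Weights are illustrative assumptions."""
    def ratio(num, den):
        return num / den if den else 0.0

    penalty = (
        0.5 * ratio(h.debunked_posts, h.total_posts)
        + 0.3 * ratio(h.copied_from_flagged_sources, h.total_posts)
        + 0.2 * ratio(h.active_days_in_crises, h.active_days_total)
    )
    return round(1.0 - penalty, 3)
```

A channel with no debunked or copied posts scores 1.0, while one where half its posts were debunked, everything was copied from flagged sources, and it was active only during crises scores 0.25.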

But the biggest question is sustainability. Telegram’s policies could change tomorrow. They could block scraping. They could limit API access. According to a September 2024 survey by the International Fact-Checking Network, 73% of users cite inconsistent API access as their biggest risk. If Telegram decides to shut this down, the whole system could collapse.

Can You Build One?

Yes, but it’s not easy. You need:

- Basic Python skills

- Understanding of NLP (natural language processing)

- Access to Telegram’s Bot API

- Computing power to process 1,000+ messages per minute

- A database of verified false claims (like the Conspiracy Resource Dataset with 15,000+ URLs)
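To give a sense of the starting point, here is a minimal polling loop against Telegram’s Bot API using only Python’s standard library. It assumes a bot token from @BotFather and that the bot has been added to the channels it monitors, since the Bot API only delivers posts a bot can see. The `known_claims` dictionary of key phrases is a hypothetical stand-in for a verified-claims database; it does not reflect the Conspiracy Resource Dataset’s actual format, and substring matching is far cruder than the NLP the list calls for.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional

API_URL = "https://api.telegram.org/bot{token}/{method}"

def get_updates(token: str, offset: Optional[int] = None, timeout: int = 30) -> list:
    """Long-poll the Bot API's getUpdates method (needs network access and a real token)."""
    params = {"timeout": timeout, "allowed_updates": json.dumps(["channel_post", "message"])}
    if offset is not None:
        params["offset"] = offset
    url = API_URL.format(token=token, method="getUpdates") + "?" + urllib.parse.urlencode(params)
    with urllib.request.urlopen(url, timeout=timeout + 10) as resp:
        data = json.load(resp)
    return data.get("result", []) if data.get("ok") else []

def match_known_claims(update: dict, known_claims: dict) -> list:
    """Return IDs of known false claims whose key phrase appears in a post's text or caption."""
    msg = update.get("channel_post") or update.get("message") or {}
    text = (msg.get("text") or msg.get("caption") or "").lower()
    return [cid for cid, phrase in known_claims.items() if phrase.lower() in text]

def poll_forever(token: str, known_claims: dict) -> None:
    offset = None
    while True:
        for update in get_updates(token, offset):
            offset = update["update_id"] + 1  # confirms this update so it is not sent again
            hits = match_known_claims(update, known_claims)
            if hits:
                # Hand off to human reviewers here; printing is a placeholder.
                print(update["update_id"], hits)
```

Keeping the matching logic in its own function means it can be tested offline on saved update JSON, without a token or network access.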

EPFL’s open-source tools have the best documentation, rated 4.3/5 on GitHub. Commercial tools? Often poorly documented. One user on Trustpilot called Check First’s guides “opaque.”

Setup takes 2-3 weeks if you’re technical. If you’re not? You’ll need help. Many journalism schools now offer workshops on Telegram bot networks. The Journalism Festival in March 2025 trained over 200 reporters from 30 countries.

The Bottom Line

Collaborative fact-checking networks powered by Telegram bots aren’t magic. They don’t replace journalists. They don’t fix the root problem of misinformation. But they give people a fighting chance. In a world where lies spread faster than truth, these networks are the closest thing we have to an early warning system.

They’re fast. They’re scalable. They’re built by people who care, not by corporations trying to maximize ad revenue. And right now, they’re the only thing standing between a viral lie and a public panic.

Will they last? Maybe not. But until something better comes along, they’re the best tool we’ve got.