Imagine getting answers to your questions about the city council vote, a local fire, or the school budget not from a reporter on deadline, but from a chatbot that knows exactly what you’re asking and answers in seconds. That’s not science fiction. It’s happening right now in newsrooms from small towns in Ohio to conflict zones in Eastern Europe, all powered by AI bots running on Telegram.

Why Telegram? Because Speed Matters

Telegram isn’t just another messaging app. For journalists, it’s the fastest wire service on earth. A paper posted to arXiv in October 2025 found that breaking news appears on Telegram 3 to 5 hours faster than through traditional wire services like AP or Reuters. In places where press freedom is limited, or during disasters when phones go down, Telegram channels become the first place people post photos, videos, and eyewitness accounts. Newsrooms didn’t invent this; they adapted to it.

That’s why bots on Telegram aren’t just convenient. They’re necessary. A bot can monitor 20+ news channels at once, filter out noise using simple regex patterns, and summarize what’s left before a human even wakes up. The TeleFlash system, used by journalists covering Ukraine and Sudan, pulls in raw Telegram posts, strips out spam, and sends a clean 3-line summary to a reporter’s Slack. No more scrolling for hours. No more missing the first alert.

How These Bots Actually Work
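The regex-based noise filtering mentioned in the channel-monitoring description can be sketched in a few lines of Python. This is a minimal illustration, not TeleFlash’s actual code: the patterns, the keyword list, and the function names `is_relevant` and `filter_posts` are all assumptions made for the example.

```python
import re

# Hypothetical patterns; a real newsroom would tune these to its own channels.
SPAM_PATTERNS = [
    re.compile(r"https?://t\.me/joinchat/\S+", re.IGNORECASE),  # invite-link spam
    re.compile(r"\b(promo code|giveaway|subscribe now)\b", re.IGNORECASE),
]
# Hypothetical keywords that mark a post as worth a reporter's attention.
KEYWORD_PATTERN = re.compile(
    r"\b(fire|flood|evacuat\w*|outage|shelling)\b", re.IGNORECASE
)


def is_relevant(post_text: str) -> bool:
    """Keep a post only if it matches a news keyword and no spam pattern."""
    if any(p.search(post_text) for p in SPAM_PATTERNS):
        return False
    return KEYWORD_PATTERN.search(post_text) is not None


def filter_posts(posts: list[str]) -> list[str]:
    """Return the posts that survive the spam and keyword checks, in order."""
    return [p for p in posts if is_relevant(p)]


sample = [
    "Flood water rising on Maple St, photos attached",
    "GIVEAWAY!!! subscribe now for a promo code",
    "Good morning everyone",
    "Power outage reported downtown",
]
kept = filter_posts(sample)
```

A filter like this only removes noise. It says nothing about whether a surviving post is true, which is why the summary still goes to a human.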

Most Telegram newsbots follow a similar blueprint. They start with the Telegram Bot API, which lets developers create a bot that can receive and send messages. Behind the scenes, there’s a Python backend, often built with FastAPI, that handles incoming questions, remembers past chats, and pulls in real-time data from news APIs like CNN, Reuters, or local government feeds.

Take Newsmate, a bot profiled on Substack in May 2025. It doesn’t just answer questions. It learns. If you ask about school board meetings every Tuesday, it starts sending you a digest every Monday. If you live in Dallas and ask about road closures, it ignores national news and focuses only on local sources. It stores your preferences, tracks your questions, and even remembers when you asked about the same topic last month.

Behind the scenes, an LLM (like GPT-4 or Claude 3) turns raw text into human-sounding replies. But here’s the key difference from ChatGPT: these bots are trained only on verified news sources. They don’t pull from Reddit, Twitter, or random blogs. They’re fed content from the newsroom’s own archives, approved wire services, and public records. That’s why The Washington Post’s bot, Ask the Post AI, can say, “This answer is based on our reporting from November 4, 2024,” and not just guess.

What These Bots Can (and Can’t) Do
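To make the blueprint concrete, here is a rough sketch of the step between receiving a Telegram update and sending a reply with attribution. The `message.chat.id` and `message.text` fields and the `chat_id`/`text` payload follow the Telegram Bot API’s `sendMessage` method. Everything else is assumed for illustration: the in-memory `ARCHIVE`, the keyword lookup in `find_story`, and the wording of the replies. The LLM step and the actual HTTP call are left out, and in a FastAPI backend `handle_update` would sit inside the webhook route.

```python
from datetime import date
from typing import Optional

# Hypothetical in-memory "archive"; a real bot would query the newsroom's own
# database or search index instead.
ARCHIVE = [
    {
        "topic": "school budget",
        "published": date(2024, 11, 4),
        "summary": "Placeholder summary of the school budget story.",
    },
]


def find_story(question: str, archive: list) -> Optional[dict]:
    """Naive lookup: return the first story whose topic appears in the question."""
    q = question.lower()
    for story in archive:
        if story["topic"] in q:
            return story
    return None


def handle_update(update: dict, archive: list) -> Optional[dict]:
    """Turn one Telegram update into a sendMessage payload.

    Returns None when the update carries no text message to answer.
    """
    message = update.get("message") or {}
    text = message.get("text")
    chat_id = (message.get("chat") or {}).get("id")
    if not text or chat_id is None:
        return None

    story = find_story(text, archive)
    if story is None:
        reply = "I can't answer that from our verified reporting."
    else:
        d = story["published"]
        reply = (
            f"{story['summary']} This answer is based on our reporting "
            f"from {d:%B} {d.day}, {d.year}."
        )
    # This dict is what would be POSTed to the Bot API's sendMessage method.
    return {"chat_id": chat_id, "text": reply}


payload = handle_update(
    {"update_id": 1,
     "message": {"chat": {"id": 42}, "text": "What happened with the school budget?"}},
    ARCHIVE,
)
```

Keeping the lookup and the reply-building separate from the network code makes the attribution logic easy to test without a live bot token.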

Let’s be clear: these bots aren’t journalists. They’re assistants. They’re great at:

- Answering factual questions: “When is the next city council meeting?”

- Summarizing long articles: “Give me the key points from the school budget vote.”

- Fetching archived content: “Show me all stories about the river cleanup in 2023.”

- Delivering personalized updates: “Send me updates on the new library construction.”

But they struggle with:

- Time-sensitive questions: 37% of requests for “latest updates” fail because the bot’s data is 20 minutes old.

- Complex follow-ups: “Why did the council vote yes, and what did the mayor say in private?” Bots often hit a wall.

- Non-English queries: 68% of journalists in the arXiv study said bots perform poorly with Spanish, Arabic, or Ukrainian content.

- Verifying rumors: If someone posts a photo of a fire on Telegram and says “the whole neighborhood burned down,” the bot can’t tell if it’s real. That’s still up to a human.
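One way to soften the time-sensitive failure in the list above (answers built on data that is already 20 minutes old) is to have the bot check the age of its data before answering. The sketch below is an assumption about how that could look, not a description of any particular newsroom’s bot: the 5-minute `MAX_AGE` threshold, the function name `answer_latest`, and the reply wording are all invented for the example.

```python
from datetime import datetime, timedelta, timezone

# Assumed threshold; the right value depends on the beat and the data source.
MAX_AGE = timedelta(minutes=5)


def answer_latest(summary: str, fetched_at: datetime, now: datetime,
                  max_age: timedelta = MAX_AGE) -> str:
    """Return the summary labelled with its age, or an honest 'stale' message."""
    age = now - fetched_at
    minutes = int(age.total_seconds() // 60)
    if age > max_age:
        return (
            f"My most recent data is {minutes} minutes old, so it may be out of date. "
            "Please check our live coverage or contact the newsroom."
        )
    return f"{summary} (as of {minutes} minutes ago)"


now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
stale = answer_latest("Road closed.", now - timedelta(minutes=20), now)
fresh = answer_latest("Road closed.", now - timedelta(minutes=2), now)
```

The guard does not make the data any fresher. It only keeps the bot from presenting old data as current.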

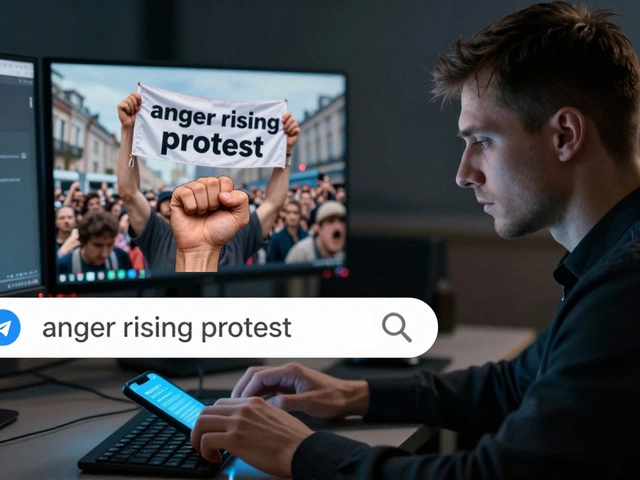

Why Newsrooms Are Choosing Restricted Bots Over Free-Form AI

You might think: “Why not let users ask anything?” But the biggest newsrooms aren’t doing that. Spotlight PA built an election bot that only answered 12 pre-approved questions. No open-ended chats. No guessing. Just facts. Result? Misinformation dropped by 81%. The Washington Post’s bot doesn’t say, “I think…” or “Maybe…” It says, “Based on our reporting from November 4, 2024, the results were certified at 8:32 p.m.”

Why? Because trust is fragile. A 2025 report from the University of Minnesota’s Hubbard School found that 63% of Americans don’t trust AI-generated news. And if a bot gives a wrong number on the city budget, people won’t blame the bot. They’ll blame the newspaper.

So newsrooms are choosing control over creativity. They’re building “FAQ bots,” not “ask me anything” bots. And it’s working. The Local NewsBot Studio found that FAQ bots got 78% user satisfaction. General knowledge bots? Only 43%.

Who’s Using This, and How Much Does It Cost?

It’s not just big names. The Washington Post, Forbes, and the Financial Times have bots. But so do tiny outlets. A small paper in rural Wisconsin built its bot in 22 days for $3,800. A college newspaper in Michigan spent $1,200. The tools? Python, Telegram’s free API, and open-source LLMs like Mistral. You don’t need a team of engineers. You need one person who can code, and the will to try.

The learning curve? About 80-100 hours of developer time for a basic bot. The biggest hurdles? Telegram’s rate limits (about 20 messages per minute to any one group, and roughly one message per second in an individual chat) and getting access to local government data feeds. Most newsrooms don’t have APIs for their own archives. That’s the real bottleneck.

But the payoff? Engagement. In the Local NewsBot Studio study, 44% of small newsrooms saw a spike in audience interaction. People weren’t just reading. They were asking. And coming back.

The Big Trade-Off: Speed vs. Accuracy

The biggest question isn’t “Can we build this?” It’s “Should we?” Journalists are caught between two pressures: the need to move fast, and the duty to get it right. A bot that answers in 10 seconds but gets the population number wrong is worse than one that says, “I can’t answer that.” That’s why the smartest bots now say things like: “This tool only pulls from verified sources like the city’s official website and our own reporting. For deeper context, call our newsroom at 555-0123.” It’s not perfect. But it’s honest.
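The restricted approach, where the bot answers only pre-approved questions and says so when it can’t, is simple enough to sketch directly. The entries in `APPROVED_FAQ`, the keyword-matching rule, and the function name `answer` are hypothetical. A production bot would likely match questions more robustly, but the shape is the same: a vetted answer or an honest refusal, never a guess.

```python
import re

# Hypothetical pre-approved entries: trigger keywords mapped to a vetted answer.
APPROVED_FAQ = [
    ({"council", "meeting"},
     "Placeholder: vetted answer about the next city council meeting."),
    ({"polls", "close"},
     "Placeholder: vetted answer about when polls close."),
]

FALLBACK = (
    "I can't answer that. This tool only pulls from verified sources like "
    "the city's official website and our own reporting."
)


def answer(question: str) -> str:
    """Return a vetted answer if every keyword of an entry appears, else decline."""
    words = set(re.findall(r"[a-z']+", question.lower()))
    for keywords, vetted in APPROVED_FAQ:
        if keywords <= words:  # all trigger keywords are present in the question
            return vetted
    return FALLBACK


known = answer("When is the next city council meeting?")
unknown = answer("What did the mayor say in private?")
```

Because every possible reply is written and checked by a person in advance, the worst case is an unhelpful refusal rather than a wrong number attributed to the newsroom.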

What’s Next? Hybrid Journalism

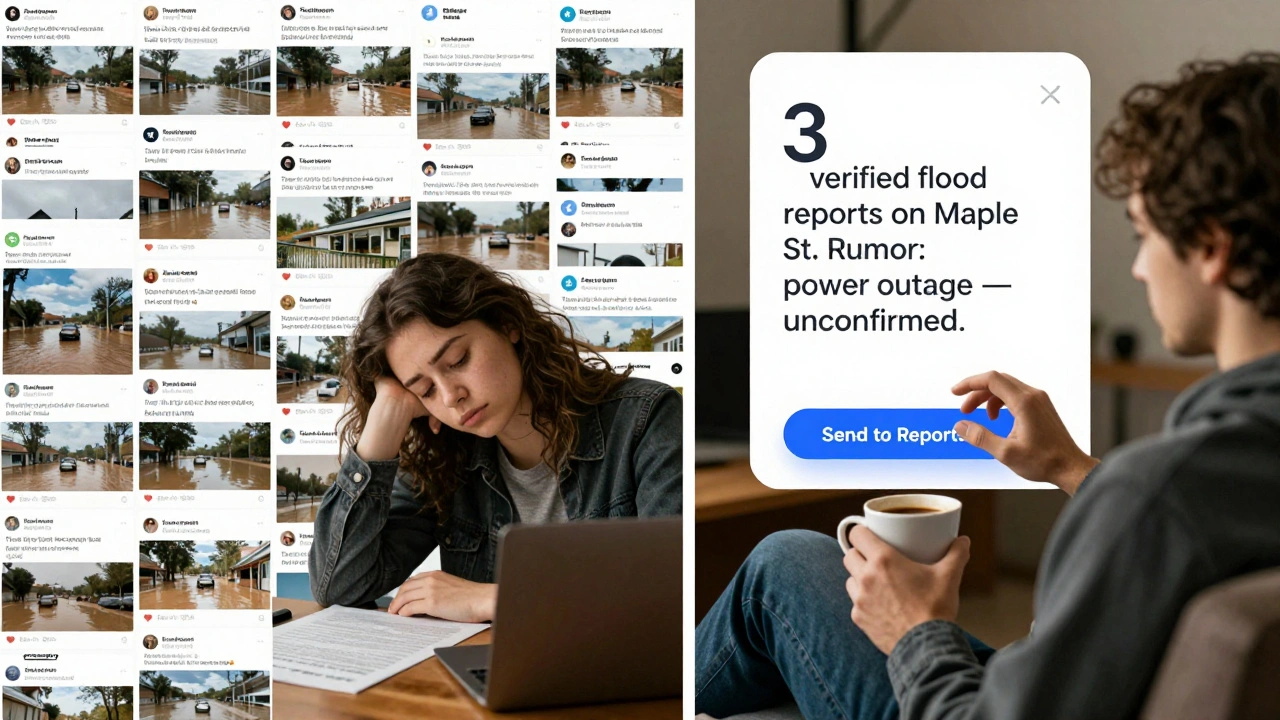

The future isn’t bots replacing reporters. It’s bots helping them. Imagine this: a bot scans 50 Telegram channels during a storm. It flags three posts with photos of flooded homes, two with calls for help, and one with a likely rumor. It sends a summary to a reporter: “Three reports of flooding on Maple St., each with photos. Rumor: power outage downtown. No confirmation.” The reporter calls the fire department. Confirms. Publishes. Saves lives.

That’s the model TeleFlash is already using. The bot does the grunt work. The human does the judgment. And that’s where the real power lies. Not in replacing journalism, but in restoring it.

Why This Matters for Local News

Local news is dying. Newsrooms are shrinking. Readers are leaving. But Telegram bots are bringing people back, not because they’re flashy, but because they’re useful. A 2025 Reddit post from a user named u/NewsGeek2025 said: “My local paper’s bot helped me find archived election coverage I’d been searching for months.” That’s not a viral tweet. That’s a person who felt heard. When a bot answers a question in 12 seconds, it doesn’t just save time. It rebuilds trust. One small interaction at a time.

Final Thought: It’s Not About the Tech. It’s About the Relationship.

AI doesn’t care about your town. But a bot programmed by your neighbor, trained on your local papers, and designed to help you find the truth? That’s different. This isn’t about replacing journalists. It’s about giving them back the time they lost to noise. The time to verify. To investigate. To write. The bots aren’t the future of journalism. They’re the tools that let journalism survive.

Can Telegram bots replace human journalists?

No. Telegram bots are tools for filtering, summarizing, and delivering information, not for reporting, investigating, or providing context. They handle repetitive tasks so journalists can focus on deeper stories. A bot can tell you when the city council met, but only a reporter can explain why the vote mattered.

Are Telegram newsbots accurate?

They’re only as accurate as their data. Well-built bots pull from verified sources like official government sites and trusted news feeds. But they still fall short: 32% of responses are “I don’t know,” and 37% of time-sensitive requests fail. The best bots admit their limits instead of guessing. Trust comes from honesty, not perfection.

How much does it cost to build a Telegram newsbot?

As little as $1,200 for a basic FAQ bot. The Local NewsBot Studio found that small newsrooms can deploy functional bots in under 30 days for under $5,000. The biggest cost isn’t software. It’s developer time. Expect 80-100 hours of work for a simple setup. Telegram’s API is free, and open-source tools like Python and Mistral keep costs low.

Why not use ChatGPT or Google Gemini (formerly Bard) instead?

Because they don’t control the source. ChatGPT can pull from anywhere, including unverified social media. Newsroom bots are trained only on approved content: their own archives, wire services, and public records. That means attribution is clear, and misinformation risk is lower. Plus, bots on Telegram can be updated daily with local news. General AI can’t.

Do readers trust these bots?

Not yet. Only 28% of American adults trust AI-generated news, according to a 2025 University of Minnesota study. But trust rises when bots are transparent. The Washington Post’s bot scores 3.7/5 because it cites sources. Bots that say “I don’t know” or “This is from our November 4 report” earn more trust than those that pretend to know everything.

Is this just for big newsrooms?

No. In fact, small local newsrooms are leading the way. The Local NewsBot Studio found that 44% of small outlets saw increased audience engagement after launching a bot. With low costs and simple tools, even a one-person newsroom can build a bot in a month. The barrier isn’t money. It’s knowing where to start.

What’s the biggest risk of using AI in newsrooms?

The biggest risk is eroding trust by pretending the bot is infallible. If a bot gives a wrong number and the newsroom doesn’t correct it, readers lose faith, not just in the bot, but in the whole organization. The solution? Transparency. Always say where the answer came from. Always admit limits. And always have a human check before publishing anything important.